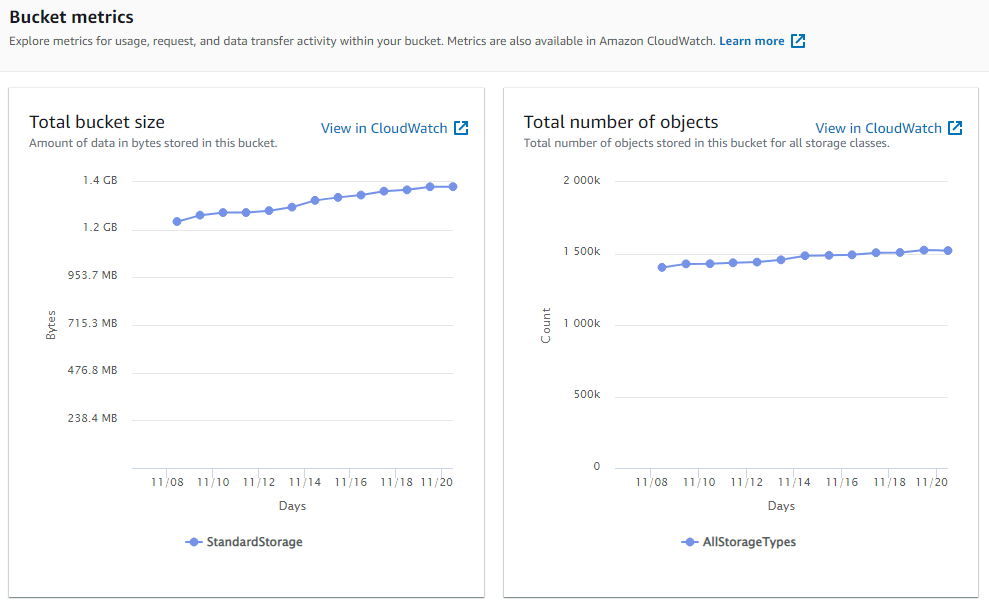

Like many websites and mobile apps, www.signbsl.com hosts its static media files on Amazon S3, which provides a reliable service without the need to manage servers. The bucket currently holds more than 126,000 images and videos. With a self-hosted setup I would usually find missing files by checking the Apache access logs, but with S3 you need to turn on server access logging to get this information.

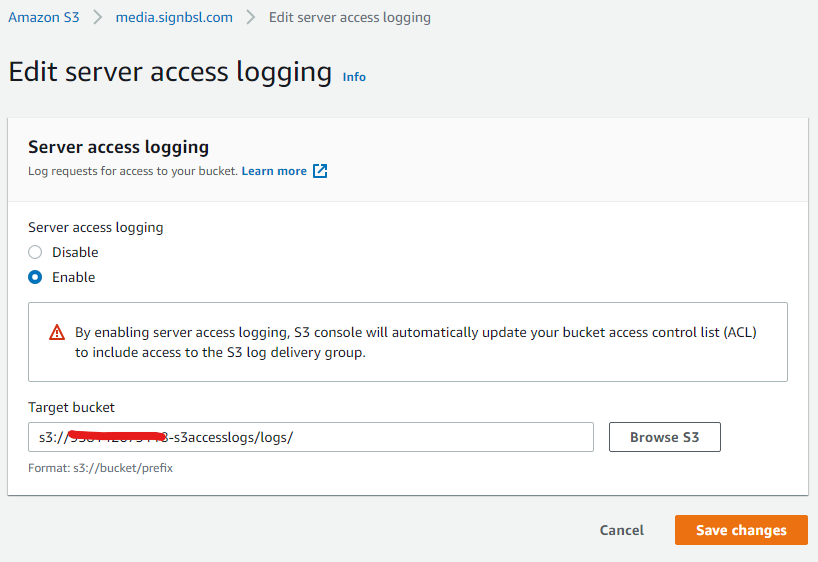

Go to your bucket, open the Properties tab and choose Edit under Server access logging. Here you can enable access logging and choose the bucket the logs are stored in.
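If you would rather script this step than use the console, the boto3 S3 client exposes the same setting through its `put_bucket_logging` call. This is a minimal sketch under a few assumptions. `enable_access_logging` and `build_logging_status` are hypothetical helper names, and the bucket names are placeholders. Unlike the console, the sketch does not add the bucket policy that lets the S3 logging service write into the target bucket, so that policy is assumed to already be in place.

```python
def build_logging_status(target_bucket: str, target_prefix: str = "") -> dict:
    """Build the BucketLoggingStatus argument expected by put_bucket_logging."""
    return {
        "LoggingEnabled": {
            "TargetBucket": target_bucket,
            "TargetPrefix": target_prefix,
        }
    }


def enable_access_logging(s3_client, source_bucket: str, target_bucket: str,
                          target_prefix: str = "") -> None:
    """Turn on server access logging for source_bucket (hypothetical helper).

    s3_client is expected to be a boto3 S3 client, e.g. boto3.client("s3").
    Assumes the target bucket already allows the S3 logging service to write to it.
    """
    if source_bucket == target_bucket:
        # Logging a bucket into itself produces extra log records for the log writes
        raise ValueError("use a separate bucket for the access logs")
    s3_client.put_bucket_logging(
        Bucket=source_bucket,
        BucketLoggingStatus=build_logging_status(target_bucket, target_prefix),
    )
```

For example, `enable_access_logging(boto3.client("s3"), "example-media-bucket", "example-account-s3-logs", "media/")` would send the media bucket's logs to a separate logging bucket under a `media/` prefix (all names are hypothetical).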

For the logs I use a bucket whose name is prefixed with the account name (this helps me find which account a bucket is in).

With millions of requests each month, S3 logging will generate millions of log files in this logging bucket. To keep this under control, open the logging bucket, go to the Management tab and create a lifecycle rule that deletes objects older than 21 days (or fewer). For this project, that keeps the number of files manageable.
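You can also apply the same lifecycle rule from code with the S3 client's `put_bucket_lifecycle_configuration` call. This sketch assumes a boto3 S3 client is passed in. The helper names and the rule ID are hypothetical. Note that this call replaces the bucket's entire lifecycle configuration, so you would need to include any existing rules as well.

```python
def build_lifecycle_configuration(days: int, prefix: str = "") -> dict:
    """Build a LifecycleConfiguration that expires objects after `days` days."""
    if days < 1:
        raise ValueError("days must be a positive integer")
    return {
        "Rules": [
            {
                "ID": f"DeleteLogsAfter{days}Days",  # hypothetical rule name
                "Filter": {"Prefix": prefix},  # an empty prefix matches every object
                "Status": "Enabled",
                "Expiration": {"Days": days},
            }
        ]
    }


def apply_log_expiry(s3_client, log_bucket: str, days: int = 21, prefix: str = "") -> None:
    """Set a single expiry rule on the logging bucket (hypothetical helper).

    Note: this overwrites any lifecycle rules already configured on the bucket.
    """
    s3_client.put_bucket_lifecycle_configuration(
        Bucket=log_bucket,
        LifecycleConfiguration=build_lifecycle_configuration(days, prefix),
    )
```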

With logging turned on, we need a report of only the requests that resulted in a 404 status code. The report should list the most frequently requested missing files first, so that we can prioritise fixing the files that will have the most benefit.
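To make the goal concrete, here is a small local sketch of the same idea in plain Python. It parses S3 server access log records, keeps only those with a 404 status, and counts requests per key. This is only an illustration; the template uses Athena to do the work. The regular expression assumes the leading fields of the documented log format: bucket owner, bucket, bracketed time, remote IP, requester, request ID, operation, key, quoted request URI and HTTP status. `top_missing_keys` is a hypothetical name.

```python
import re
from collections import Counter

# Leading fields of an S3 server access log record, in order:
# bucket_owner bucket [time] remote_ip requester request_id operation key "request_uri" http_status ...
LOG_RECORD = re.compile(
    r'^\S+ \S+ \[[^\]]*\] \S+ \S+ \S+ \S+ (?P<key>\S+) '
    r'(?:"[^"]*"|-) (?P<status>\S+)'
)


def top_missing_keys(lines, status="404", limit=50):
    """Return (key, request_count) pairs for records with the given HTTP status,
    most frequently requested first."""
    counts = Counter()
    for line in lines:
        match = LOG_RECORD.match(line)
        if match is None:
            continue  # skip lines that do not look like access log records
        if match["status"] == status and match["key"] != "-":
            counts[match["key"]] += 1
    return counts.most_common(limit)
```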

I have a handy CloudFormation template that does just this and emails the report to your chosen address.

AWSTemplateFormatVersion: 2010-09-09

Description: >-

Email Athena result

Parameters:

BucketName:

Type: String

Description: S3 Bucket Name where Athena results should be outputted to. Must be lowercase

AthenaQuery:

Type: String

Description: Athena Query to run. Ensure all quotes are escaped!

AthenaDatabase:

Type: String

Description: Athena Database to use.

ScheduleCronExpression:

Type: String

Description: Cron Expression for when the Athena report should be run e.g. 10am every Monday 0 10 ? * MON * see https://docs.aws.amazon.com/AmazonCloudWatch/latest/events/ScheduledEvents.html (Note this is UTC time)

EmailToAddress:

Type: String

Description: Address email should be sent to.

EmailFromAddress:

Type: String

Description: Address email should be sent from. This must be setup in SES.

EmailSubject:

Type: String

Description: Email subject.

Resources:

AthenaResultBucket:

Type: AWS::S3::Bucket

DependsOn: [ 'EmailReportFunction' ]

Properties:

BucketName: !Sub "${BucketName}"

AccessControl: BucketOwnerFullControl

PublicAccessBlockConfiguration:

BlockPublicAcls: true

BlockPublicPolicy: true

IgnorePublicAcls: true

RestrictPublicBuckets: true

NotificationConfiguration:

LambdaConfigurations:

- Event: 's3:ObjectCreated:*'

Filter:

S3Key:

Rules:

- Name: suffix

Value: .csv

Function: !GetAtt EmailReportFunction.Arn

LifecycleConfiguration:

Rules:

- Id: DeleteContentAfter30Days

Status: 'Enabled'

ExpirationInDays: 30

LambdaExecutionRole:

Type: AWS::IAM::Role

Properties:

AssumeRolePolicyDocument:

Version: '2012-10-17'

Statement:

- Effect: Allow

Principal: {Service: [lambda.amazonaws.com]}

Action: ['sts:AssumeRole']

Path: /

ManagedPolicyArns:

- arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole # Grant access to log to CloudWatch

- arn:aws:iam::aws:policy/AmazonAthenaFullAccess # Grant access to use Athena

- arn:aws:iam::aws:policy/AmazonS3FullAccess # Grant full access to S3 both to store results and also to query S3 data. This could be made more restrictive as it should only have access to the source data and output location. see: https://aws.amazon.com/premiumsupport/knowledge-center/access-denied-athena/

Policies:

- PolicyName: SendRawEmail

PolicyDocument:

Version: '2012-10-17'

Statement:

- Effect: Allow

Action:

- 'ses:SendRawEmail'

Resource: ['*']

LogsLogGroup:

Type: AWS::Logs::LogGroup

Properties:

LogGroupName: !Sub "/aws/lambda/${RunAthenaQueryFunction}"

RetentionInDays: 7

RunAthenaQueryFunction:

Type: AWS::Lambda::Function

Properties:

Role : !GetAtt LambdaExecutionRole.Arn

Runtime: "python3.8"

MemorySize: 128

Timeout: 10

Handler: index.lambda_handler

Code:

ZipFile: !Sub |

import time

import boto3

query = '${AthenaQuery}'

DATABASE = '${AthenaDatabase}'

output='s3://${AthenaResultBucket}/'

def lambda_handler(event, context):

client = boto3.client('athena')

# Execution

response = client.start_query_execution(

QueryString=query,

QueryExecutionContext={

'Database': DATABASE

},

ResultConfiguration={

'OutputLocation': output,

}

)

return response

return

RunAthenaQueryEventRule:

Type: AWS::Events::Rule

Properties:

Description: 'Will call the lambda function to produce the Athena Report.'

ScheduleExpression: !Sub "cron(${ScheduleCronExpression})"

State: 'ENABLED'

Targets:

-

Arn: !GetAtt 'RunAthenaQueryFunction.Arn'

Id: RunAthenaQuery

RunAthenaQueryCloudWatchEventPermission:

Type: "AWS::Lambda::Permission"

Properties:

Action: 'lambda:InvokeFunction'

FunctionName: !GetAtt 'RunAthenaQueryFunction.Arn'

Principal: 'events.amazonaws.com'

SourceArn: !GetAtt 'RunAthenaQueryEventRule.Arn'

EmailReportLogsLogGroup:

Type: AWS::Logs::LogGroup

Properties:

LogGroupName: !Sub "/aws/lambda/${EmailReportFunction}"

RetentionInDays: 7

EmailReportFunction:

Type: AWS::Lambda::Function

Properties:

Role : !GetAtt LambdaExecutionRole.Arn

Runtime: "python3.7"

MemorySize: 128

Timeout: 20

Handler: index.lambda_handler

Code:

ZipFile: !Sub |

import os.path

import boto3

import email

from botocore.exceptions import ClientError

from email.mime.multipart import MIMEMultipart

from email.mime.text import MIMEText

from email.mime.application import MIMEApplication

s3 = boto3.client("s3")

def lambda_handler(event, context):

print(event)

# Note: This code does not seem to handle encoded characters very well. e.g. if the s3 key name contains a backet etc.

SENDER = "${EmailFromAddress}"

RECIPIENT = "${EmailToAddress}"

AWS_REGION = "${AWS::Region}"

SUBJECT = "${EmailSubject}"

FILEOBJ = event["Records"][0]

BUCKET_NAME = str(FILEOBJ['s3']['bucket']['name'])

KEY = str(FILEOBJ['s3']['object']['key'])

FILE_NAME = os.path.basename(KEY)

TMP_FILE_NAME = '/tmp/' + FILE_NAME

s3.download_file(BUCKET_NAME, KEY, TMP_FILE_NAME)

ATTACHMENT = TMP_FILE_NAME

BODY_TEXT = "The Object file was uploaded to S3"

client = boto3.client('ses',region_name=AWS_REGION)

msg = MIMEMultipart()

# Add subject, from and to lines.

msg['Subject'] = SUBJECT

msg['From'] = SENDER

msg['To'] = RECIPIENT

textpart = MIMEText(BODY_TEXT)

msg.attach(textpart)

att = MIMEApplication(open(ATTACHMENT, 'rb').read())

att.add_header('Content-Disposition','attachment',filename=ATTACHMENT)

msg.attach(att)

print(msg)

try:

response = client.send_raw_email(

Source=SENDER,

Destinations=[RECIPIENT],

RawMessage={ 'Data':msg.as_string() }

)

except ClientError as e:

print(e.response['Error']['Message'])

else:

print("Email sent! Message ID:",response['MessageId'])

RunEmailAthenaResultS3EventPermission:

Type: "AWS::Lambda::Permission"

Properties:

Action: 'lambda:InvokeFunction'

FunctionName: !GetAtt 'EmailReportFunction.Arn'

Principal: 's3.amazonaws.com'

SourceAccount: !Ref 'AWS::AccountId'

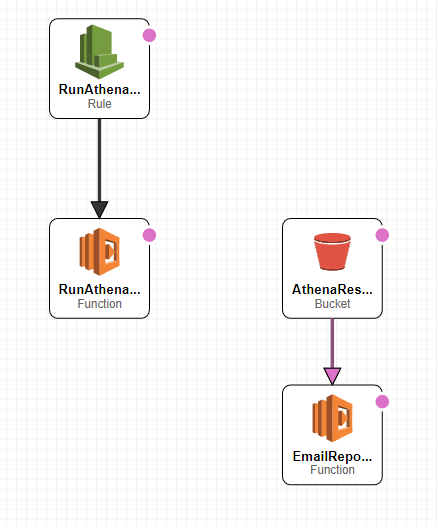

SourceArn: !Sub 'arn:aws:s3:::${BucketName}'This CloudFormation template will create a S3 bucket to store the Athena Search results. Create a Lambda Function that will make the Athena search request that is triggered by an EventBridge rule. The S3 Bucket will have an Event Notification setup so that when the Athena Query saves its CSV search result file into the bucket it will then call another Lambda function which will send the email out as an attachment.
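One way to deploy the template from a script is the boto3 CloudFormation client's `create_stack` call. This sketch assumes the template has been saved locally and read into a string. `deploy_report_stack` and `build_stack_parameters` are hypothetical helpers, and any stack name or values you pass in are your own. Because the template creates an IAM role (without a custom name), CloudFormation requires the `CAPABILITY_IAM` acknowledgement.

```python
REQUIRED_PARAMETERS = (
    "BucketName", "AthenaQuery", "AthenaDatabase", "ScheduleCronExpression",
    "EmailToAddress", "EmailFromAddress", "EmailSubject",
)


def build_stack_parameters(values: dict) -> list:
    """Convert a plain dict into the Parameters list that create_stack expects."""
    missing = [name for name in REQUIRED_PARAMETERS if name not in values]
    unknown = sorted(set(values) - set(REQUIRED_PARAMETERS))
    if missing or unknown:
        raise ValueError(f"missing parameters: {missing}, unknown parameters: {unknown}")
    # Emit the parameters in the same order as they are declared in the template
    return [{"ParameterKey": name, "ParameterValue": str(values[name])}
            for name in REQUIRED_PARAMETERS]


def deploy_report_stack(cfn_client, stack_name: str, template_body: str, values: dict) -> str:
    """Create the stack and return its StackId.

    cfn_client is expected to be a boto3 client, e.g. boto3.client("cloudformation").
    """
    response = cfn_client.create_stack(
        StackName=stack_name,
        TemplateBody=template_body,
        Parameters=build_stack_parameters(values),
        # The template creates an IAM role, so this acknowledgement is required
        Capabilities=["CAPABILITY_IAM"],
    )
    return response["StackId"]
```

`create_stack` returns as soon as stack creation starts. If the script needs to wait for the stack to finish, the CloudFormation client's `stack_create_complete` waiter can be used.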

I have the CloudFormation template configured with the following query:

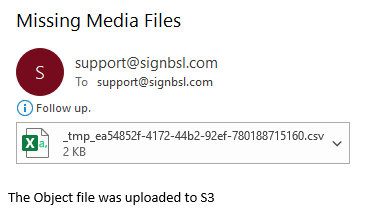

```
SELECT COUNT (DISTINCT requestdatetime) AS count, key FROM s3_access_logs_db.media_signbsl_logs WHERE (httpstatus != \'200\' AND httpstatus != \'304\' AND key NOT LIKE \'%wlwmanifest.xml\') GROUP BY key ORDER BY count DESC Limit 50
```

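This gives me the 50 most frequently requested keys that returned something other than a 200 or 304 status (404s for missing files, but also any other error status such as 403), ignoring any requests for wlwmanifest.xml.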
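The quotes in the query are escaped because the template substitutes `AthenaQuery` directly into a single-quoted Python string literal (`query = '${AthenaQuery}'`) inside the Lambda code. If you generate the parameter value from a script, a small helper such as the hypothetical `escape_for_template` below can do the escaping. It handles backslashes first and then single quotes.

```python
def escape_for_template(query: str) -> str:
    """Escape a SQL query so it survives being placed inside '...' in Python source."""
    # Escape backslashes first so the backslashes added for quotes are not doubled
    escaped = query.replace("\\", "\\\\").replace("'", "\\'")
    # A raw newline would end the single-quoted literal, so replace line breaks with spaces
    return escaped.replace("\r", " ").replace("\n", " ")
```

Running the plain, unescaped query through this helper produces the escaped form shown above.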

I use the cron schedule `0 10 ? * MON *`, which means I receive an email report every Monday at 10am (UTC) with a list of the files I need to resolve.
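EventBridge cron expressions have six fields: minutes, hours, day-of-month, month, day-of-week and year. The day-of-month and day-of-week fields cannot both be set, so one of them has to be `?`. The sketch below labels the fields of an expression. For the simple weekly case only, it also works out the next run time in UTC. It is not a general cron parser, and `describe_cron` and `next_weekly_run` are hypothetical names.

```python
from datetime import datetime, timedelta, timezone

FIELD_NAMES = ("minutes", "hours", "day_of_month", "month", "day_of_week", "year")


def describe_cron(expression: str) -> dict:
    """Label the six fields of an EventBridge cron expression."""
    fields = expression.split()
    if len(fields) != 6:
        raise ValueError("EventBridge cron expressions have exactly six fields")
    named = dict(zip(FIELD_NAMES, fields))
    # Exactly one of day-of-month / day-of-week must be '?'
    if (named["day_of_month"] == "?") == (named["day_of_week"] == "?"):
        raise ValueError("use '?' in exactly one of day-of-month and day-of-week")
    return named


def next_weekly_run(after: datetime, weekday: int = 0, hour: int = 10, minute: int = 0) -> datetime:
    """Next UTC run strictly after `after` for a once-a-week schedule.

    weekday uses Python's convention (Monday == 0), so the defaults
    correspond to cron(0 10 ? * MON *).
    """
    if after.tzinfo is None:
        raise ValueError("pass a timezone-aware datetime")
    after = after.astimezone(timezone.utc)
    candidate = after.replace(hour=hour, minute=minute, second=0, microsecond=0)
    # Move forward to the requested weekday (0 to 6 days ahead)
    candidate += timedelta(days=(weekday - candidate.weekday()) % 7)
    if candidate <= after:
        candidate += timedelta(days=7)
    return candidate
```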

In terms of cost, the report runs four times a month, which comes to about $0.02 a month for Athena. The larger cost comes from S3, because on every run Athena has to read through hundreds of thousands of small S3 log files. In this example, the S3 GET request charges are around $2.00 per month.
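As a rough model, the S3 part of the bill grows with the number of log objects Athena reads on each run multiplied by the number of runs. This means the lifecycle rule on the logging bucket is also the main lever on cost. The sketch below is a back-of-the-envelope estimate, not a pricing calculator. The per-1,000-GET price is an input that you should take from the S3 pricing page for your region, and the function names are hypothetical.

```python
def retained_log_objects(log_objects_per_day: int, retention_days: int) -> int:
    """Approximate number of log objects in the logging bucket when the report runs."""
    return log_objects_per_day * retention_days


def monthly_get_cost(runs_per_month: int, objects_per_run: int,
                     price_per_1000_gets: float, gets_per_object: float = 1.0) -> float:
    """Back-of-the-envelope S3 GET cost of the scheduled Athena scans.

    Assumes every retained log object is read gets_per_object times per run;
    LIST requests, data transfer and Athena's own charge are ignored.
    """
    requests = runs_per_month * objects_per_run * gets_per_object
    return requests / 1000 * price_per_1000_gets
```

Because `objects_per_run` is roughly the daily log volume multiplied by the retention period, shortening the lifecycle rule reduces this part of the bill roughly in proportion. The trade-off is a shorter window of requests in each report. Keeping at least seven days means each weekly report still covers the whole week.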